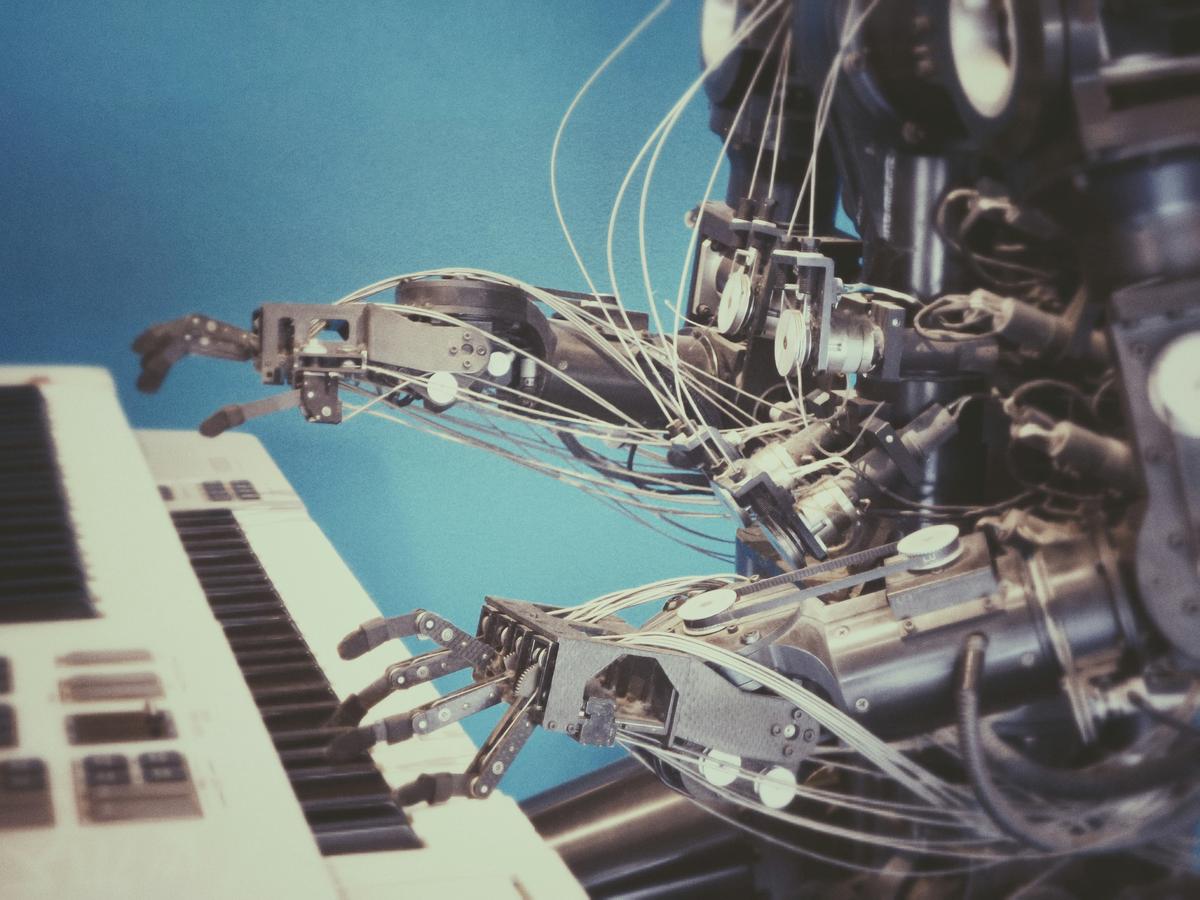

Understanding Generative AI in Music

Generative AI is transforming music composition, blending technology with creativity. Machine learning models such as Recurrent Neural Networks (RNNs), Variational Autoencoders (VAEs), and Transformers act as digital composers, crafting melodies without touching an instrument.

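RNNs process music one step at a time, carrying a hidden state that summarizes the notes heard so far. Each predicted note therefore depends on the preceding context, not only on its immediate predecessor. Trained on extensive collections of music, they generate compositions that flow naturally, although plain RNNs tend to lose track of structure over long passages.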
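To make the idea of note-by-note dependence concrete, here is a minimal sketch of a single-layer recurrent network generating a melody. It is an illustration under stated assumptions, not a production model: the seven-note vocabulary, the hidden size, and the function names are invented for this example, and the weights are random rather than trained, so the output shows the mechanism and not musical quality.

```python
import math
import random

NOTES = ["C", "D", "E", "F", "G", "A", "B"]  # toy vocabulary (assumption)
HIDDEN = 8                                   # hidden state size (assumption)

def make_matrix(rows, cols, rng):
    """Random weights stand in for weights a real model would learn."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

def rnn_step(x_index, h, W_xh, W_hh, W_hy):
    """One recurrent step: update the hidden state, then score the next note."""
    # The input is one-hot, so W_xh @ x reduces to picking column x_index.
    new_h = [
        math.tanh(W_xh[i][x_index] + sum(W_hh[i][j] * h[j] for j in range(len(h))))
        for i in range(len(h))
    ]
    logits = [sum(W_hy[k][i] * new_h[i] for i in range(len(new_h)))
              for k in range(len(W_hy))]
    # Softmax turns the scores into a probability for each candidate note.
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return new_h, [e / total for e in exps]

def generate(seed_note, length, seed=0):
    """Sample a melody of `length` notes, starting from `seed_note`."""
    rng = random.Random(seed)
    W_xh = make_matrix(HIDDEN, len(NOTES), rng)
    W_hh = make_matrix(HIDDEN, HIDDEN, rng)
    W_hy = make_matrix(len(NOTES), HIDDEN, rng)
    h = [0.0] * HIDDEN
    idx = NOTES.index(seed_note)
    melody = [seed_note]
    for _ in range(length - 1):
        h, probs = rnn_step(idx, h, W_xh, W_hh, W_hy)
        idx = rng.choices(range(len(NOTES)), weights=probs)[0]
        melody.append(NOTES[idx])
    return melody
```

Calling `generate("C", 8)` returns eight note names starting with C. Because the hidden state `h` is passed from step to step, each choice is conditioned on everything generated so far.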

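VAEs compress music into a compact latent space that captures its underlying patterns. Sampling from that space, or interpolating between points in it, produces new compositions with specific characteristics, such as a blend of two styles. They're like artists who know the rules but choose to forge their own path.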
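One way to picture how a VAE arrives at new compositions is through its latent space. The sketch below assumes a hypothetical encoder has already mapped two melodies to a mean and a log-variance each. It shows the standard reparameterized sampling step and a linear interpolation between the two resulting latent codes. The decoder that would turn each code back into notes is not shown, and all numbers are made up for illustration.

```python
import math
import random

def sample_latent(mu, log_var, rng):
    """Reparameterization: z = mu + sigma * eps, with eps drawn from N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def interpolate(z_a, z_b, t):
    """Linear blend between two latent codes; t=0 gives z_a, t=1 gives z_b."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]

rng = random.Random(0)

# Hypothetical encoder outputs for two melodies (values are invented).
mu_a, log_var_a = [0.0, 2.0], [-4.0, -4.0]
mu_b, log_var_b = [2.0, 0.0], [-4.0, -4.0]

z_a = sample_latent(mu_a, log_var_a, rng)
z_b = sample_latent(mu_b, log_var_b, rng)

# Five evenly spaced points on the path from melody A to melody B.
# A trained decoder (not shown) would map each point to a melody.
path = [interpolate(z_a, z_b, step / 4) for step in range(5)]
```

Decoding the intermediate points of `path` is what would give melodies that gradually shift from one piece's character to the other's.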

Transformers use self-attention to relate every note in a passage to every other note at once, rather than stepping through the sequence in order. This lets them pick up long-range patterns, such as a motif that returns many bars later, and generate harmonically rich music that stays coherent as it evolves.
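The ability to look at a whole passage at once comes from self-attention, the core operation of a Transformer. The sketch below is a deliberately simplified version: it uses each note's embedding directly as query, key, and value, whereas real Transformers add learned projections, multiple heads, and positional information. The three-dimensional embeddings are invented toy values.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(embeddings):
    """Scaled dot-product attention with no learned projections (simplification)."""
    dim = len(embeddings[0])
    all_weights, outputs = [], []
    for query in embeddings:
        # Compare this note against every note in the passage, itself included.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in embeddings]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is a weighted mix of all note embeddings.
        outputs.append([
            sum(weights[j] * embeddings[j][c] for j in range(len(embeddings)))
            for c in range(dim)
        ])
    return outputs, all_weights

# Toy passage: the first and third notes share an embedding (a repeated note).
notes = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 0.0, 0.0]]
outputs, weights = self_attention(notes)
```

In `weights[0]`, the first note attends equally to itself and to its repeat in third position, and less to the different note between them, regardless of how far apart the positions are.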

Training these AI models involves feeding them large, diverse musical datasets. They learn statistical patterns in melody, chord progressions, and rhythm, then sample from what they have learned to generate new pieces that reflect their training data while varying it.
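The learn-then-generate loop can be illustrated with something far simpler than a neural network. The sketch below swaps in a first-order Markov chain: it counts which note follows which in a tiny, invented corpus, then samples a new melody from those counts. It is a stand-in meant to show the principle, not how RNNs, VAEs, or Transformers are actually trained.

```python
import random
from collections import Counter, defaultdict

def train(melodies):
    """Count how often each note is followed by each other note."""
    transitions = defaultdict(Counter)
    for melody in melodies:
        for current, following in zip(melody, melody[1:]):
            transitions[current][following] += 1
    return transitions

def generate(transitions, start, length, seed=0):
    """Sample a melody by repeatedly picking a follower of the last note."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        options = transitions.get(melody[-1])
        if not options:
            break  # the last note was never followed by anything in training
        candidates = list(options)
        counts = [options[note] for note in candidates]
        melody.append(rng.choices(candidates, weights=counts)[0])
    return melody

# Invented two-melody corpus, purely for illustration.
corpus = [["C", "E", "G", "E", "C"], ["C", "D", "E", "D", "C"]]
model = train(corpus)
new_melody = generate(model, "C", 6)
```

Every note-to-note step in `new_melody` was seen somewhere in the corpus, yet the melody as a whole need not match either training piece. That is a small-scale version of output that reflects the training data while varying it.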

Generative AI in music offers inspiration, efficiency, and collaboration. It can help composers overcome creative blocks, handle repetitive tasks, and explore new musical territories. This partnership between human and machine expands the boundaries of musical possibility and makes composition more accessible to people without formal training.

Benefits of Generative AI in Music Composition

Generative AI offers several advantages in music composition:

- Overcoming creative blocks: AI can suggest new ideas, exploring areas of melody, harmony, and rhythm that might have remained undiscovered.

- Efficiency: The initial work of laying down tracks becomes streamlined, allowing musicians to focus on refining and embellishing their pieces.

- Genre exploration: AI opens doors to a multitude of styles, enabling artists to experiment with and blend different musical landscapes.

- Collaboration: Musicians can "collaborate" with AI, leveraging its computational prowess to generate and refine musical pieces.

- Personalization: AI can analyze preferences and feedback to craft music that feels custom-made for each listener, fostering a profound connection with the audience.

This blend of human creativity and machine intelligence redefines the creative process, ensuring that innovation in music continues to thrive. Whether you're an aspiring musician or a seasoned composer, the potential to experiment and innovate with generative AI is transformative.

Ethical Considerations and Challenges

Generative AI in music raises several ethical considerations:

- Copyright and ownership: AI-generated music blurs the lines of authorship, calling for a re-evaluation of intellectual property laws.

- Authenticity: Can AI-generated compositions truly convey the depth of human sentiment?

- Transparency: Listeners should know whether they're hearing human or AI-generated music.

- Data bias: AI systems may perpetuate biases present in their training data, potentially marginalizing certain musical forms.

- Impact on human musicians: There's concern about AI displacing composers, session musicians, or producers.

Striking a balance between human creativity and AI innovation is crucial. AI should enhance artistic endeavors, not replace them. By addressing these challenges responsibly, we can harness the power of AI while preserving the human essence of music creation.

"The goal is to use AI as a tool to augment human creativity, not to replace it." [1]

Applications of Generative AI in Music

Generative AI is being applied in various areas of music:

- Media production: Platforms like AIVA and Soundraw provide personalized, royalty-free music for content creators, enhancing storytelling in visual media.

- Interactive experiences: AI tools create dynamic soundscapes that adapt to user input or environmental factors, transforming listeners into active participants.

- Remixing and covering: Tools like Boomy and the now-discontinued Jukedeck generate new tracks from high-level style choices, giving artists a starting point for revitalizing classics or creating new renditions of original works.

- Sound design: AI-driven platforms offer a vast array of instruments and sound effects, helping designers craft immersive audio landscapes for games and films.

- Songwriting: AI acts as a digital muse, suggesting musical ideas and helping composers develop their sketches into full compositions.

These applications demonstrate how AI can enhance creativity, open new possibilities, and push the boundaries of musical expression. As we continue to explore these technologies, we're not just preserving traditional music-making but venturing into exciting new territories.

A recent study found that 87% of musicians who used AI in their composition process reported increased productivity and creativity [2]. This statistic underscores the potential of AI as a powerful tool in the music industry.

Future Perspectives of AI in Music

The future of AI in music composition is bright with possibilities. As AI becomes more integrated into music creation tools, we're likely to see a transformation in how artists work and create.

Imagine AI-powered tools becoming a standard part of every musician's digital workspace. These tools could provide real-time assistance in:

- Beat creation

- Harmonic structuring

- Lyric writing

Research projects like OpenAI's MuseNet and Google's Magenta are early examples of this trend. They point toward a future where artists might interact with their Digital Audio Workstations (DAWs) using natural language commands.

As AI models evolve, we might see algorithms that not only learn from existing music but innovate in ways that challenge our understanding of creativity. These models could potentially generate music that responds to real-time external stimuli, creating a dynamic interplay between performer and audience.

Cross-domain creativity is another exciting frontier. We might see AI facilitating the synthesis of music with other art forms, such as visual arts or dance. Imagine:

- A ballet performance where AI composes music in sync with the dancers' movements

- An art installation where visuals and sound evolve together in real-time

Personalized music experiences could become more prevalent. AI-powered systems might curate playlists that evolve with your changing moods and preferences, or generate custom tracks for specific activities like workouts. Platforms like Brain.fm already offer a glimpse into this future, creating music to improve focus or relaxation based on listeners' needs [1].

These advancements could revolutionize how we consume and engage with music, making it a more intimate and personalized journey. We might see:

- Concerts where the setlist adapts on the fly based on audience reactions

- Virtual reality environments with dynamically shifting soundtracks

As AI becomes more integrated into music creation, we're likely to see new paradigms of collaboration between humans and machines. This could lead to an era where creativity flows more freely, with artists less constrained by technical limitations and free to explore novel auditory landscapes.

"In this future, AI doesn't replace human creativity but amplifies it."

We're stepping into a world where the partnership between human and machine opens up new horizons for musical expression, inviting all of us to be co-creators in this exciting journey.

Photo by possessedphotography on Unsplash

Generative AI is reshaping music composition, blending human creativity with machine intelligence. This partnership opens new horizons for artists, pushing the boundaries of what's musically possible. As we embrace this technological evolution, the future of music promises to be a harmonious blend of innovation and artistry.